One of the most exciting aspects of deep learning’s emergence in computer vision a few years ago was that it didn’t appear to require any feature engineering, unlike previous techniques like histograms-of-gradients or Haar cascades. As neural networks ate up other fields like NLP and speech, the hope was that feature engineering would become unnecessary for those domains too. At first I fully bought into this idea, and saw any remaining manually-engineered feature pipelines as legacy code that would soon be subsumed by more advanced models.

Over the last few years of working with product teams to deploy models in production, I’ve realized I was wrong. I’m not the first person to raise this idea, but I have some thoughts I haven’t seen widely discussed on exactly why feature engineering isn’t going away anytime soon. One of them is that even the original vision case actually does rely on a *lot* of feature engineering; we just haven’t been paying attention. Here’s a quote from a typical blog post discussing image models:

“a deep learning system is a fully trainable system beginning from *raw input*, for example image pixels”

(Emphasis added by me)

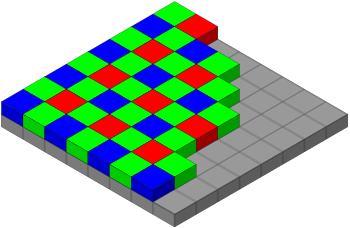

I spent over a decade working on graphics and image processing, so the implicit assumption that the kinds of images we train networks on are at all “raw” always bothered me a bit. I was used to starting with truly RAW image files to preserve as much information from the original scene as possible. These formats reflect the output of the camera’s CCD hardware pretty closely. This means that the values for each pixel correspond roughly linearly to the number of photons hitting the detector at that point, and the position of each measured value is actually in a Bayer pattern, rather than a simple grid of pixels.

So, even to get to the kind of two-dimensional array of evenly spaced pixels with RGB values that ML practitioners expect an image to contain, we have to execute some kind of algorithm to resample the original values. There are deep learning approaches to this problem, but it’s clear that this is an important preprocessing step, and one that I’d argue should count as feature engineering. There’s a whole world of other transformations like this that have to be performed before we get what we’d normally recognize as an image. These include some very complex and somewhat arbitrary transformations like white balancing, which everyday camera users might only become aware of during an apocalypse. There are also steps like gamma correction, which take the high dynamic ranges possible for the CCD output values (which reflect photon counts) and scale them into numbers which more closely resemble the human eye’s response curve. Put very simplistically, we can see small differences in dark areas with much more sensitivity than differences in bright parts, so to represent images in an eight-bit byte it’s convenient to apply a gamma curve so that more of the codes are used for darker values.
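To make those steps a little more concrete, here’s a toy sketch of the kind of pipeline that sits between the sensor and the “raw” pixels a model sees. Everything in it is an illustrative assumption rather than how any real camera works: the RGGB cell layout, the function names (`demosaic_rggb`, `white_balance`, `gamma_encode`, `develop`), the made-up white balance gains, and the plain power curve standing in for a real transfer function like sRGB.

```python
def demosaic_rggb(bayer):
    """Collapse each 2x2 RGGB cell into one RGB pixel (half resolution)."""
    rgb = []
    for y in range(0, len(bayer) - 1, 2):
        row = []
        for x in range(0, len(bayer[y]) - 1, 2):
            r = bayer[y][x]
            # Each cell holds two green samples, so average them.
            g = (bayer[y][x + 1] + bayer[y + 1][x]) / 2.0
            b = bayer[y + 1][x + 1]
            row.append((r, g, b))
        rgb.append(row)
    return rgb


def white_balance(pixel, gains=(2.0, 1.0, 1.5)):
    """Scale each channel by a gain (hypothetical values), clipping at 1.0."""
    return tuple(min(1.0, c * g) for c, g in zip(pixel, gains))


def gamma_encode(linear, gamma=2.2):
    """Map a linear value in [0, 1] to an 8-bit code using a power curve."""
    return round(255 * (linear ** (1.0 / gamma)))


def develop(bayer):
    """Run the toy pipeline: demosaic, then white balance, then gamma."""
    return [
        [tuple(gamma_encode(c) for c in white_balance(px)) for px in row]
        for row in demosaic_rggb(bayer)
    ]


# Linear sensor readings in [0, 1], laid out as R G / G B cells.
raw = [
    [0.10, 0.20, 0.02, 0.04],
    [0.20, 0.10, 0.04, 0.02],
]
image = develop(raw)
print(image)
```

Even in this stripped-down form there are three separate design decisions baked into the output, and a linear mid-gray of 0.5 ends up well above code 127, which is the “more codes for darker values” effect described above.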

I don’t want this to turn into an image processing tutorial, but I hope that these examples illustrate that there’s a lot of engineering happening before ML models get an image. I’ve come to think of these steps as feature engineering for the human visual system, and see deep learning as piggy-backing on all this work without realizing it. It makes intuitive sense to me that models benefit from the kinds of transformations that help us recognize objects in the world too. My instinct is that gamma correction makes it a lot easier to spot things in natural scenes, because you’d hope that the differences between two materials would remain roughly constant regardless of lighting conditions, and scaling the values keeps the offsets between the colors from varying as widely as they would with the raw measurements. I can easily believe that neural networks benefit from this property just like we do.
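Here’s a small worked example of that instinct about gamma, under some loudly stated assumptions: two hypothetical materials with reflectances of 0.2 and 0.4, a dim light and a bright light ten times stronger, and a simple power curve with gamma 2.2 instead of a real camera’s response. It compares how much the gap between the two materials changes across lighting conditions, with and without the curve.

```python
def identity(value):
    """Leave the linear measurement untouched."""
    return value


def gamma_curve(value, gamma=2.2):
    """Simple power-law encoding (an approximation, not the sRGB curve)."""
    return value ** (1.0 / gamma)


# Hypothetical reflectances for two materials in the same scene.
REFLECTANCE_A = 0.2
REFLECTANCE_B = 0.4


def material_gap(light, encode):
    """Difference between the two materials' encoded values under one light."""
    return encode(REFLECTANCE_B * light) - encode(REFLECTANCE_A * light)


dim, bright = 0.1, 1.0

# How many times larger the gap is under bright light than under dim light.
linear_spread = material_gap(bright, identity) / material_gap(dim, identity)
gamma_spread = material_gap(bright, gamma_curve) / material_gap(dim, gamma_curve)

print(f"linear gap grows {linear_spread:.2f}x, gamma gap grows {gamma_spread:.2f}x")
```

With linear values the gap scales directly with the light, so it grows tenfold. With the power curve it only grows by a factor of 10^(1/2.2), a bit under three. The offsets still aren’t constant, but they vary a lot less, which is the property I’m guessing networks benefit from.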

If you accept that there is a lot of hidden feature engineering happening behind the scenes even for the classic vision models, what does this mean for other applications of deep networks? My experience has been that it’s important to think explicitly about feature engineering when designing models, and if you believe your inputs are raw, it’s worth doing a deep dive to understand what’s really happening before you get your data. For example, I’ve been working with a team that’s using accelerometer and gyroscope data to interpret gestures. They were getting good results in their application, but thanks to supply-chain problems they had to change the IMU they were using. It turned out that the original part included sensor fusion to produce estimates of the device’s absolute orientation, and that’s what they were feeding into the network. Other parts had different fusion algorithms which didn’t work as well, and even trying software fusion wasn’t effective. Some problems included significant lag responding to movement and biases that sent the orientation way off over time. We switched the model to using the unfused accelerometer and gyroscope values, and were able to get back a lot of the accuracy we’d lost.
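For readers who haven’t met sensor fusion before, here’s a minimal sketch of one common software approach, a complementary filter, on a single pitch axis. To be clear, this is not the algorithm inside any of the IMU parts mentioned above, and not what the team tried; the function names, the `alpha` blend factor, and the simulated bias are all assumptions chosen to show why gyroscope-only integration drifts and how blending in the accelerometer’s gravity reading reins that in.

```python
import math


def accel_pitch(ax, az):
    """Pitch angle in radians implied by gravity on two accelerometer axes."""
    return math.atan2(ax, az)


def integrate_gyro(rates, dt):
    """Estimate pitch by summing angular rate over time (gyro only)."""
    angle = 0.0
    estimates = []
    for rate in rates:
        angle += rate * dt
        estimates.append(angle)
    return estimates


def complementary_filter(rates, accels, dt, alpha=0.98):
    """Blend the integrated gyro estimate with the accelerometer's pitch."""
    angle = 0.0
    estimates = []
    for rate, (ax, az) in zip(rates, accels):
        gyro_estimate = angle + rate * dt
        angle = alpha * gyro_estimate + (1.0 - alpha) * accel_pitch(ax, az)
        estimates.append(angle)
    return estimates


# Simulated device lying still and level for 20 seconds, with a gyroscope
# that reports a small constant bias (hypothetical value) instead of zero.
dt = 0.01
bias = 0.05  # radians per second
steps = 2000
rates = [bias] * steps
accels = [(0.0, 1.0)] * steps  # gravity entirely on the z axis

gyro_only = integrate_gyro(rates, dt)
fused = complementary_filter(rates, accels, dt)

print(f"gyro-only drift: {gyro_only[-1]:.3f} rad, fused: {fused[-1]:.3f} rad")
```

The gyro-only estimate wanders off by about a radian even though the device never moved, while the fused one stays close to zero. The catch is that `alpha` is a trade-off between trusting the gyroscope and trusting the accelerometer, and the accelerometer reading is only a clean gravity reference when the device isn’t accelerating, so tuning choices like this are one plausible reason why different fusion implementations can behave so differently on the same gestures.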

In this case, deep learning did manage to eat that part of the feature engineering pipeline, but because we didn’t have a good understanding of what was happening to our input data before we started, we ended up spending extra time dealing with problems that could have been handled more easily in the design and prototyping phase. Also, I don’t have deep knowledge of accelerometer hardware, but I wouldn’t be at all surprised if the “raw” values we’re now using have actually been through some significant processing.

Another area where feature engineering has surprised me with its usefulness is labeling and debugging data problems. When I was working on building a more reliable magic wand gesture model, I was getting very frustrated with my inability to tell if the training data I was capturing from people was good enough. Just staring at six curves of the acceleration and gyroscope X, Y, Z values over time wasn’t enough for me to tell if somebody had actually performed the expected gesture or not. I thought about trying to record video of the contributors, but that seemed like a lot to ask. Instead, I put some work into reconstructing the absolute position and movement from the “raw” values. This effectively became an extremely poor man’s version of sensor fusion, but focused on the needs of this particular application. Not only was I able to visualize the data to check its quality, I also started feeding the rendered results into the model itself, which improved the accuracy. It also had the side-benefit that I could display an intuitive visualization of the gesture as seen by the model back to the user, so that they could gain an understanding of why it failed to recognize some attempts and learn to adapt their movements to be clearer from the model’s perspective!
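As a rough illustration of the idea (not the actual code from that project), here’s a sketch that integrates gyroscope rates into a 2D stroke and rasterizes it into a tiny grid that could be eyeballed or fed to a model. The axis mapping (X rate as pitch, Z rate as yaw), the function names, the grid size, and the decision to ignore the accelerometer and any coupling between axes are all simplifying assumptions on my part.

```python
def gyro_to_stroke(gyro_samples, dt):
    """Integrate (x, y, z) angular rates into a path of (yaw, pitch) angles."""
    yaw = 0.0
    pitch = 0.0
    points = [(yaw, pitch)]
    for gx, _gy, gz in gyro_samples:
        pitch += gx * dt  # assumed: rotation about X tilts the tip up or down
        yaw += gz * dt    # assumed: rotation about Z swings it left or right
        points.append((yaw, pitch))
    return points


def rasterize(points, size=8):
    """Scale the stroke to fit a size x size grid and mark visited cells."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    min_x, min_y = min(xs), min(ys)
    # Use one scale for both axes so the shape isn't stretched.
    span = max(max(xs) - min_x, max(ys) - min_y) or 1.0
    grid = [[0] * size for _ in range(size)]
    for x, y in points:
        col = min(size - 1, int((x - min_x) / span * (size - 1) + 0.5))
        row = min(size - 1, int((y - min_y) / span * (size - 1) + 0.5))
        grid[size - 1 - row][col] = 1  # flip so "up" is at the top
    return grid


def render(grid):
    """Turn the grid into text so a person can check the gesture by eye."""
    return "\n".join("".join("#" if c else "." for c in row) for row in grid)


# A made-up gesture: sweep right for half a second, then sweep up.
dt = 0.01
samples = [(0.0, 0.0, 1.0)] * 50 + [(1.0, 0.0, 0.0)] * 50
grid = rasterize(gyro_to_stroke(samples, dt))
print(render(grid))
```

The same small grid serves both purposes described above: a human can glance at it to decide whether a recording is a usable example, and it’s already in a shape that’s easy to hand to an image-style model.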

I don’t want to minimize deep learning’s achievements in reducing the toil involved in building feature pipelines; I’m still constantly amazed at how effective deep models are. I would like to see more emphasis put on feature engineering in research and teaching though, since it’s still an important issue that practitioners have to wrestle with to successfully deploy ML applications. I’m hoping this post will at least spark some curiosity about where your data has really been before you get it!