Photo by Stuart Pilbrow

I'll admit it: I was intimidated by MapReduce. I'd tried to read explanations of it, but even the wonderful Joel Spolsky left me scratching my head. So I plowed ahead, trying to build decent pipelines to process massive amounts of data without it. Finally, my friend Andraz staged an intervention after I proudly described my latest setup: "Pete, that's MapReduce."

Sure enough, when I looked at MapReduce again, it was almost exactly the same as the process I'd ended up with. Using Amazon's Elastic MapReduce implementation of Hadoop, I only had to change the separator character between the key and the data on each line (they use a tab, I'd used ':'), and could otherwise run my existing PHP code as-is.

I still hate the existing explanations; none of them clicked for me at all. So I decided to put together a simple project and tutorial that explains it in a way that makes sense to me. Here's the project code, with some sample data.

The first thing to understand is that MapReduce is just a way of taking fragments of information about an object scattered through a big input file, and collecting them so they're next to each other in the output. For example, imagine you had a massive set of files containing the results of a web crawl, and you need to understand which words are used in the links to each URL. You start with:

<a href="http://foo.com">Bananas</a>

<a href="http://bar.com">Apples</a>

…

<a href="http://foo.com">Bananas</a>

…

<a href="http://foo.com">Mangoes</a>

…

and you want to end up with:

…

foo.com 2 Bananas, 1 Mangoes

bar.com 1 Apples

…

How do you do it? If the data set is small enough, you loop through it all and total up the results in an associative array. Once it's too large to fit in memory, you have to try something different.
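To make the small-data case concrete, here is a minimal sketch of the in-memory approach. It is written in Python rather than the project's PHP, and the regular expression and the function name `count_in_memory` are assumptions made for illustration, not part of the project code.

```python
import re
from collections import Counter, defaultdict

# Assumed input shape: links written as <a href="http://host/...">Word</a>.
LINK_RE = re.compile(r'<a href="https?://([^/"]+)[^"]*">([^<]*)</a>')

def count_in_memory(lines):
    """Tally link words per host in a dict of Counters, all held in RAM."""
    totals = defaultdict(Counter)
    for line in lines:
        for host, word in LINK_RE.findall(line):
            totals[host][word] += 1
    return totals

sample = [
    '<a href="http://foo.com">Bananas</a>',
    '<a href="http://bar.com">Apples</a>',
    '<a href="http://foo.com">Bananas</a>',
    '<a href="http://foo.com">Mangoes</a>',
]

totals = count_in_memory(sample)
for host, words in totals.items():
    # Format each host as "host N Word, N Word".
    print(host, ", ".join(f"{n} {w}" for w, n in words.most_common()))
```

The catch is the `totals` dictionary: it has to hold every host seen so far, which is exactly what stops working once the crawl outgrows memory.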

Instead, the Map function loops through the file, and for every piece of information it finds about an object, it writes a line to the output. This line starts with a key identifying the object, followed by the information. For example, for the line <a href="http://foo.com">Bananas</a> it would write

foo.com Bananas
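A Map step along these lines could be sketched as follows. This is an illustrative Python version, not the project's mapper.php; the regular expression and the name `map_lines` are assumptions, and the tab separator follows the convention mentioned earlier for Hadoop.

```python
import re

# Assumed input shape: links written as <a href="http://host/...">Word</a>.
LINK_RE = re.compile(r'<a href="https?://([^/"]+)[^"]*">([^<]*)</a>')

def map_lines(lines):
    """Yield one 'key<TAB>value' record for every link found in the input.

    The mapper keeps no state between lines, so the input can be
    arbitrarily large and can be split across many machines.
    """
    for line in lines:
        for host, word in LINK_RE.findall(line):
            yield f"{host}\t{word}"

sample = [
    '<a href="http://foo.com">Bananas</a>',
    '<a href="http://bar.com">Apples</a>',
    'a line with no links in it',
]
mapped = list(map_lines(sample))
for record in mapped:
    print(record)
```

Passing `sys.stdin` as `lines` and printing each record would turn the same function into a command-line filter.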

How does this help? The crucial thing I missed in every other explanation is that this collection of all the output lines is sorted, so that all the entries starting with foo.com are next to each other. This was exactly what I was doing with my sort-based pipeline that Andraz commented on. You end up with something like this:

…

foo.com Bananas

foo.com Bananas

foo.com Mangoes

…

The Reduce step happens immediately after the sort. Since all the information about an object sits in adjacent lines, the reducer only ever needs to hold one object's data in memory at a time, so it's pretty easy to gather it into the output we're after, no matter how large the file gets.
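Here is a rough Python sketch of a Reduce step for the link example. It is not the project's reducer.php; the name `reduce_sorted` and the tab-separated record format are assumptions. The point it illustrates is that only one key's tally is alive at any moment.

```python
from collections import Counter

def reduce_sorted(records):
    """Yield (key, Counter) for each run of adjacent records sharing a key.

    Assumes 'key<TAB>value' strings that are already sorted by key.
    """
    current_key = None
    tally = Counter()
    for record in records:
        key, _, value = record.rstrip("\n").partition("\t")
        if key != current_key:
            # A new key means the previous one is complete: emit and forget it.
            if current_key is not None:
                yield current_key, tally
            current_key, tally = key, Counter()
        tally[value] += 1
    if current_key is not None:
        yield current_key, tally  # flush the final key

mapped = [
    "foo.com\tBananas",
    "bar.com\tApples",
    "foo.com\tBananas",
    "foo.com\tMangoes",
]
# sorted() stands in for the sort that sits between Map and Reduce.
results = list(reduce_sorted(sorted(mapped)))
for key, tally in results:
    print(key, ", ".join(f"{n} {w}" for w, n in tally.most_common()))
```

If the input were not sorted, the same key would show up in several separate runs and be emitted more than once, which is why the sort is the step that makes everything else work.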

None of this requires any complex infrastructure. If you download the project you'll see a couple of one-page PHP files, one implementing a Map step, the other Reduce, which you can run from the command line simply using:

./mapper.php < input.txt | sort | ./reducer.php > output.txt

To prove I'm not over-simplifying, you can take the exact same PHP files, load them into Amazon's Elastic MapReduce service as-is and run them to get the same results! I'll describe the exact Job Flow settings at the bottom so you can try this yourself.

The project itself takes 1200 Twitter messages either written by me, or mentioning me, and produces statistics on every user showing how often and when we exchanged public messages. It's basically a small-scale version of the algorithm that powers the twitter.mailana.com social graph visualization. One feature of note is the reducer. It tries to merge adjacent lines containing partial data in JSON format into a final accumulated result, and I've been using this across a lot of my projects.
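The idea of a reducer that merges adjacent lines of partial JSON can be sketched like this. The merge rules (add numbers, concatenate lists, recurse into objects), the field names and the user names are all assumptions chosen for illustration; the text above doesn't spell out how the project's reducer combines values, so treat this as one plausible shape rather than the actual implementation.

```python
import json

def merge(a, b):
    """Combine two partial values (assumed rules, not the project's)."""
    if isinstance(a, dict) and isinstance(b, dict):
        out = dict(a)
        for k, v in b.items():
            out[k] = merge(out[k], v) if k in out else v
        return out
    if isinstance(a, list) and isinstance(b, list):
        return a + b
    if isinstance(a, (int, float)) and isinstance(b, (int, float)):
        return a + b
    return b  # strings or mismatched types: the later value wins

def reduce_json(records):
    """Merge adjacent 'key<TAB>json' records that share a key."""
    current_key, acc = None, None
    for record in records:
        key, _, payload = record.rstrip("\n").partition("\t")
        value = json.loads(payload)
        if key == current_key:
            acc = merge(acc, value)
        else:
            if current_key is not None:
                yield f"{current_key}\t{json.dumps(acc, sort_keys=True)}"
            current_key, acc = key, value
    if current_key is not None:
        yield f"{current_key}\t{json.dumps(acc, sort_keys=True)}"

# Hypothetical, already-sorted mapper output.
sample = [
    'alice\t{"sent": 1, "days": ["2009-01-05"]}',
    'alice\t{"received": 2, "days": ["2009-01-07"]}',
    'bob\t{"sent": 3}',
]
merged = list(reduce_json(sample))
for line in merged:
    print(line)
```

Because the output uses the same key-plus-JSON format as the input, a reducer shaped like this can be reused across jobs where only the mapper changes.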

Here's how to try this all out on Amazon's Elastic MapReduce:

– First, get all your AWS accounts set up. You'll need S3, EC2 and MapReduce.

– Now, create an S3 bucket with a unique name to contain the results.

– Go to the MapReduce console and click on Create New Job Flow.

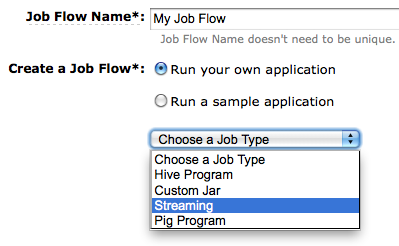

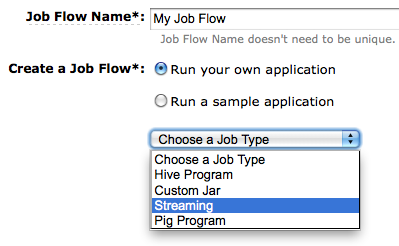

– As you go through the creation panel, copy the settings shown in the screenshots. Make sure you put in the path to your own output bucket. I've made both the input data and code buckets public, so you can leave those paths as-is.

Run the job, give it a few minutes to complete, and you should see a file called part-00000 in your output bucket. Congratulations, you've just run your first Hadoop MapReduce data analysis!

Now for the bad news. Google's just been awarded a patent on this technique, casting a shadow over Hadoop and pretty much every company doing serious data analysis. I personally think if a knucklehead like me can independently invent the process, it should be considered so obvious no patent should be possible!