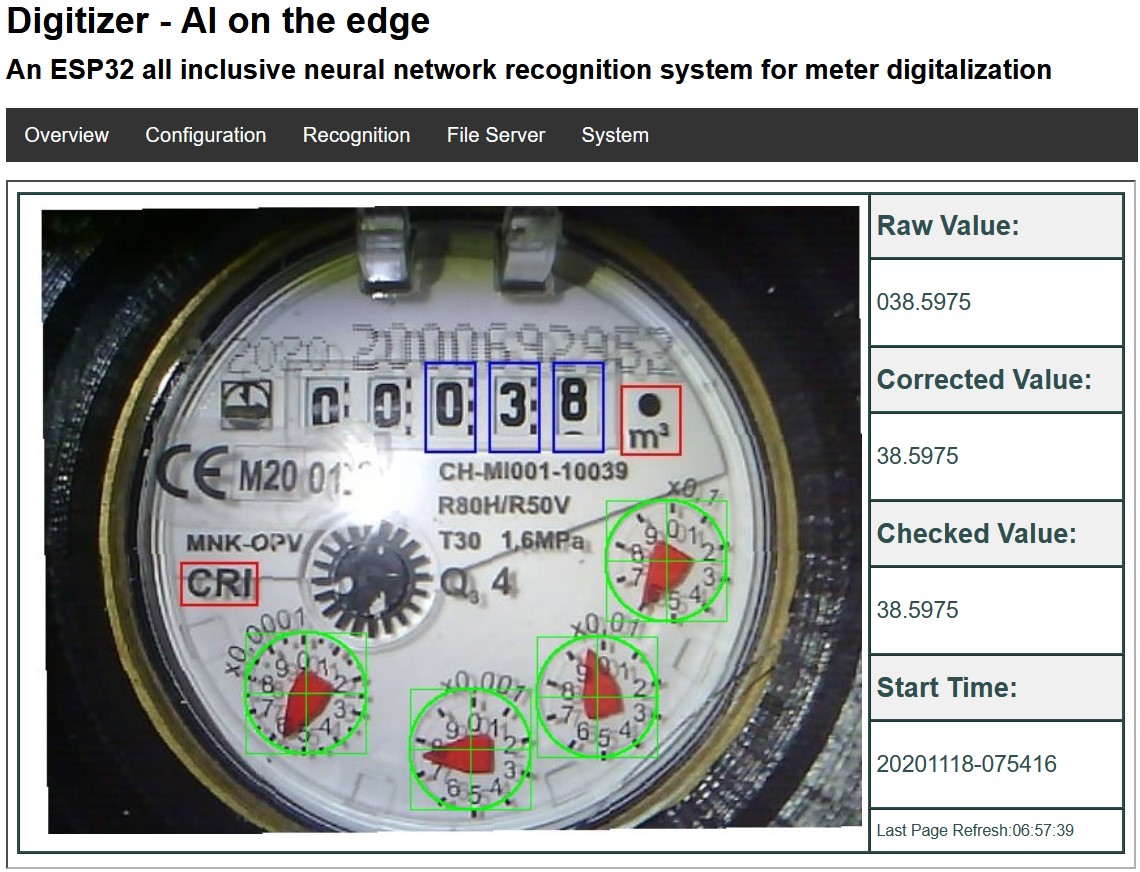

This image shows a traditional water meter that’s been converted into a web API, using a cheap ESP32 camera and machine learning to understand the dials and numbers. I expect there are going to be billions of devices like this deployed over the next decade, not only for water meters but for any older device that has a dial, counter, or display. I’ve already heard from multiple teams who have legacy hardware that they need to monitor, in environments as varied as oil refineries, crop fields, office buildings, cars, and homes. Some of the devices are decades old, so until now the only option to enable remote monitoring and data gathering was to replace the system entirely with a more modern version. This is often too expensive, time-consuming, or disruptive to contemplate. Pointing a small, battery-powered camera at the device instead offers a lot of advantages. Since there’s an air gap between the camera and the dial it’s monitoring, it’s guaranteed not to affect the rest of the system, and it’s easy to deploy as an experiment and then iterate to improve it.

If you’ve ever worked with legacy software systems, this may all seem a bit familiar. Screen scraping is a common technique to use when you have a system you can’t easily change that you need to extract information from, when there’s no real API available. You take the user interface results for a query as text, HTML, or even an image, ignore the labels, buttons, and other elements you don’t care about, and try to extract the values you want. It’s always preferable to have a proper API, since the code to pull out just the information you need can be hard to write and is usually very brittle to minor changes in the interface, but it’s an incredibly common technique all the same.
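To make the software version of this concrete, here is a minimal sketch of screen scraping using only Python's standard library. The status page, its markup, and the `label`/`value` class names are all invented for illustration; a real page would need its own rules, which is exactly why this kind of code is so brittle.

```python
from html.parser import HTMLParser

# Hypothetical status page from a system with no API (markup is made up).
PAGE = """
<html><body>
  <h1>Router status</h1>
  <table>
    <tr><td class="label">Uptime</td><td class="value">3 days</td></tr>
    <tr><td class="label">Signal</td><td class="value">-61 dBm</td></tr>
  </table>
  <button>Refresh</button>
</body></html>
"""


class ValueScraper(HTMLParser):
    """Collects label/value pairs from <td class="label"> and <td class="value"> cells."""

    def __init__(self):
        super().__init__()
        self.current = None  # class of the <td> we are inside, if any
        self.label = None    # most recent label seen, waiting for its value
        self.values = {}

    def handle_starttag(self, tag, attrs):
        if tag == "td":
            self.current = dict(attrs).get("class")

    def handle_endtag(self, tag):
        if tag == "td":
            self.current = None

    def handle_data(self, data):
        text = data.strip()
        if not text:
            return
        if self.current == "label":
            self.label = text
        elif self.current == "value" and self.label is not None:
            self.values[self.label] = text
            self.label = None
        # Headings, buttons, and anything else are ignored.


def scrape(page):
    """Return a dict of the label/value pairs found in the page."""
    parser = ValueScraper()
    parser.feed(page)
    return parser.values


if __name__ == "__main__":
    print(scrape(PAGE))
```

If the page's author renames a class or moves the values out of the table, this silently returns nothing, which is the fragility described above.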

The biggest reason we haven’t seen more adoption of this equivalent approach for IoT is that training and deploying machine learning models on embedded systems has been very hard. If you’ve done any deep learning tutorials at all, you’ll know that recognizing digits with MNIST is one of the easiest models to train. With the spread of frameworks like TensorFlow Lite Micro (which the example above apparently uses, though I can’t find the on-device code in that repo) and others, it’s starting to get easier to deploy on cheap, battery-powered devices, so I expect we’ll see more of these applications emerging. What I’d love to see is some middleware that understands common display types like dials, physical or LED digits, or status lights. Then someone with a device they want to monitor could build it out of those building blocks, rather than having to train an entirely new model from scratch.
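As a rough illustration of what composing such building blocks might look like, here is a small sketch that combines the outputs of two hypothetical components, a digit recognizer and a dial-pointer reader, into a single meter reading. The function names, the angle convention, and the meter layout are my own assumptions, not taken from any existing project, and the models that would produce these inputs are left out entirely.

```python
def dial_value(angle_degrees):
    """Map a pointer angle to a 0-10 reading.

    Assumes 0 degrees points at the '0' mark and angles increase clockwise,
    with the ten digits evenly spaced around the dial (36 degrees apart).
    """
    return (angle_degrees % 360.0) / 36.0


def meter_reading(digits, dial_angles):
    """Combine counter digits and sub-unit dials into one number.

    digits: recognized counter digits, most significant first.
    dial_angles: pointer angles in degrees; the first dial is assumed to
    show tenths, the second hundredths, and so on.
    """
    whole = 0
    for digit in digits:
        whole = whole * 10 + digit

    fraction = 0.0
    for position, angle in enumerate(dial_angles, start=1):
        # Take the last mark the pointer has passed.
        fraction += int(dial_value(angle)) * 10 ** -position

    return whole + fraction


if __name__ == "__main__":
    # Counter shows 00123, the tenths dial points at 3, the hundredths dial at 7.
    print(meter_reading([0, 0, 1, 2, 3], [108.0, 252.0]))
```

Real meters are messier than this: some dials run counter-clockwise, and a pointer sitting right on a mark needs the next dial to disambiguate it. Handling those details once, in shared middleware, is the kind of thing I have in mind.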

I know I’d enjoy being able to use something like this myself. I’d use a cell-connected device to watch my cable modem’s status, so I’d know when my connection was going flaky. I’d keep track of my mileage and efficiency with something stuck on my car’s dashboard looking at the speedometer, odometer, and gas gauge. It would be great to have my own way to monitor my electricity, gas, and water meters, and I’d have my washing machine text me when it was done. I don’t know how I’d set it up physically, but I’m always paranoid about leaving the stove on, so something that looked at the gas dials would put my mind at ease.

There’s a massive amount of information out in the real world that can’t be remotely monitored or analyzed over time, and a lot of it is shown through dials and displays. Waiting for all of the systems involved to be replaced with connected versions could take decades, which is why I’m so excited about this incremental approach. Just like search engines have been able to take unstructured web pages designed for people to read, and index them so we can find and use them, this physical version of screen-scraping takes displays aimed at humans and converts them into information usable from anywhere. A lot of different trends are coming together to make this possible, including cheap, capable hardware, widespread IoT data networks, software improvements, and the democratization of all these technologies. I’m excited to do my bit to hopefully help make this happen, and I can’t wait to see all the applications that you all come up with. Do let me know your ideas!


Hi Pete,

thanks for referring to my device. You can find the TensorFlow Lite Micro code in the tfmicro component of my code (https://github.com/jomjol/AI-on-the-edge-device/tree/master/code/components/tfmicro). There is also a GitHub repo about the training of the neural networks, which is linked from the wiki page of the main project, but it is rather basic.

The main purpose is indeed the cheap and easy conversion of old-style information for digital processing.

Best regards,

jomjol

Hi, would it work to read video frames and compare them to some stored frames (to detect people on a security cam, for example)?

In case you know of such a solution, please let me know. Most security motion sensors give lots of false alarms, so I thought about a camera I could ‘teach’ what the normal scene is, so it can call me when people show up in the frame.

Zero knowledge of AI or ML here, but some ideas on better security systems.
