When I released the Speech Commands dataset and code last year, I was hoping they would give a boost to teams building low-energy-usage hardware by providing a realistic application benchmark. It’s been great to see Vikas Chandra of ARM using them to build keyword spotting examples for Cortex M-series chips, and now a hardware startup I’ve been following, Green Waves, have just announced a new device and shared some numbers using the dataset as a benchmark. They’re showing power usage numbers of just a few milliwatts for an always-on keyword spotter, which is starting to approach the coin-battery-for-a-year target I think will open up a whole new world of uses.

I’m not just excited about this for speech recognition’s sake, but because the same hardware can also accelerate vision, and other advanced sensor processing, turning noisy signals into something actionable. I’m also fascinated by the idea that we might be able to build tiny robots with the intelligence of insects if we can get the energy usage and mass small enough, or even send smart nano-probes to nearby stars!

Neural networks offer a whole new way of programming that’s inherently a lot easier to scale down than conventional instruction-driven approaches. You can transform and convert network models in ways we’ve barely begun to explore, fitting them to hardware with few resources while preserving performance. Chips can also take a lot of shortcuts that aren’t possible with traditional code, like tolerating calculation errors, and they don’t have to worry about awkward constructs like branches; everything is straight-line math at its heart.
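To make the point about tolerating calculation errors a bit more concrete, here is a minimal sketch of one common way to shrink a model: rounding weights and inputs to 8-bit integers and doing the multiply-accumulate in integer math. Everything in it is illustrative. The function names (`quantize`, `dense_float`, `dense_int8`), the layer size, and the random data are assumptions for the example, not code from the Speech Commands release or from any particular chip's toolchain.

```python
import random


def quantize(values, num_bits=8):
    """Symmetric linear quantization: floats -> signed integers plus one scale."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(v) for v in values) or 1.0  # avoid a zero scale
    scale = max_abs / qmax
    return [round(v / scale) for v in values], scale


def dense_float(weights, inputs):
    """Reference layer: one dot product per output, no data-dependent branches."""
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]


def dense_int8(weights, inputs):
    """Same layer, but with 8-bit weights and inputs and integer accumulation."""
    n = len(inputs)
    flat = [w for row in weights for w in row]
    q_flat, w_scale = quantize(flat)  # one shared scale for the whole layer
    q_rows = [q_flat[i:i + n] for i in range(0, len(q_flat), n)]
    q_in, x_scale = quantize(inputs)
    # Integer multiply-accumulate, then a single rescale back to real units.
    return [
        sum(qw * qx for qw, qx in zip(row, q_in)) * w_scale * x_scale
        for row in q_rows
    ]


# Hypothetical 64-input, 4-output layer filled with random values.
rng = random.Random(0)
weights = [[rng.uniform(-1, 1) for _ in range(64)] for _ in range(4)]
inputs = [rng.uniform(-1, 1) for _ in range(64)]

exact = dense_float(weights, inputs)
approx = dense_int8(weights, inputs)
max_error = max(abs(a - b) for a, b in zip(exact, approx))

print("float outputs:", exact)
print("int8 outputs: ", approx)
print("largest absolute difference:", max_error)
```

The integer version never branches on the data and only needs 8-bit multiplies with a wider accumulator, which is the kind of arithmetic small chips can do cheaply. The outputs differ slightly from the float reference, and whether that difference matters depends on the model, so accuracy should be checked on real data rather than assumed.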

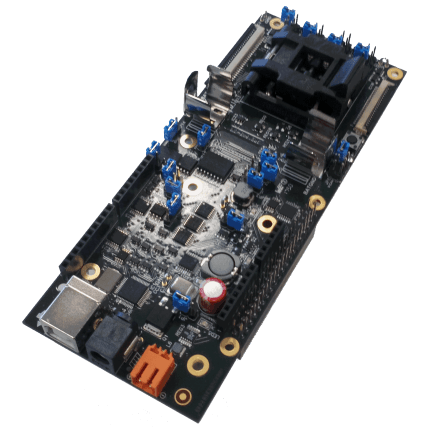

I’ve put in my preorder for a GAP8 developer kit, to join the ARM-based prototyping devices on my desk, and I’m excited to see so much activity in this area. I think we’re going to see a lot of progress over the next couple of years, and I can’t wait to see what new applications emerge as hardware capabilities keep improving!

Can you put this in perspective with other technologies? For example, versus FPGAs or neuromorphic processors like IBM’s TrueNorth chip? What about power requirements, or some sort of appropriate neural network capability metrics?

I’m also wondering if we are not repeating the same sort of processor arms races as we have seen before – e.g. Lisp processors in the 80s, Java processors in the 90s, both of which proved dead ends. Is the same likely to happen with dedicated NN processors vs general purpose processors like the ARM chip?

Hi @Petewarden (or others), do you have the actual HW in your hands? I ordered one of their dev kits months back, but it still has not shipped. Wondering if anyone else has played with this hands-on.

I am very interested in this type of technology, but a bit disappointed by their delays.

Giri